Java El Capitan

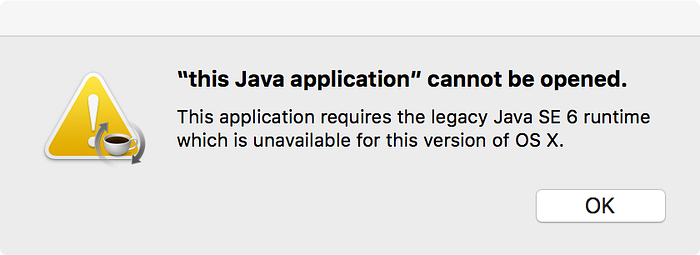

During the upgrade process to Mac OS X 10.10 Yosemite or 10.11 El Capitan, Java may be uninstalled from your system. In order to reinstall and run PDF Studio you will need to install the Java 6 Runtime again.

My previous post about installing Java on OS X received quite a bit of traffic, and I felt that some of the information was outdated, and it became a little unclear after all the edits, so I’ve endeavoured to create a much easier to understand set of instructions.

- Sep 26, 2017: Follow these instructions to uninstall Java from El Capitan and Sierra. Go to the Internet Plug-Ins folder inside the root Library folder (/Library/Internet Plug-Ins) and delete everything related to Java. To access your root Library folder, open Finder and click the “Go” menu at the top. Hold down the “Alt” key (also known as the “Option” key) and you will see the…

- Java for OS X 2015-001 installs the legacy Java 6 runtime for OS X 10.11 El Capitan, OS X 10.10 Yosemite, OS X 10.9 Mavericks, OS X 10.8 Mountain Lion, and OS X 10.7 Lion. This package is exclusively intended for support of legacy software and installs the same deprecated version of Java 6 included in the 2014-001 and 2013-005 releases.

- Jun 09, 2015 Hi guys, I noticed I was getting a lot of traffic, so I updated my post for the latest version of Java, and added a note for El Capitan's Rootless mode down the bottom. I think it would be better to recommend disabling Rootless mode and to restart manually.

The instructions for installing the JDK (Java Development Kit) are a subset of the instructions for installing just the JRE, because Oracle provides an installer for the JDK.

- Go to the Oracle Java downloads page and download the JDK installer. You should end up with a file named something like `jdk-8u60-macosx-x64.dmg`, but perhaps a newer version.
- Open the `.dmg` disk image and run the installer.
- Open Terminal.
- Edit the JDK’s newly installed `Info.plist` file to enable the included JRE to be used from the command line and from bundled applications. In the commands for this step, the third line fixes a permissions issue created by using `defaults write`. The fourth line is not required, but makes the file more user-friendly if you open it again in a text editor.
- Create a link to add backwards compatibility for some applications made for older Java versions.
- Optional: If you’re actually using the JDK for software development, you may want to set the `JAVA_HOME` environment variable. The recommended way is to use the `/usr/libexec/java_home` program, so I recommend setting `JAVA_HOME` in your `.bash_profile` like Jared suggests. This will get the latest installed Java’s home directory by default, but check out `man java_home` for ways to easily get other Java home directories.
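The `JAVA_HOME` step can be sketched as the lines below for `~/.bash_profile`. This is a minimal sketch, assuming macOS's `/usr/libexec/java_home` helper; the guard and the `java_home_dir` variable are additions for illustration, so the snippet does nothing on a machine without a JDK.

```shell
# Sketch for ~/.bash_profile. Assumes macOS, where /usr/libexec/java_home
# prints the home directory of the newest installed JDK.
if [ -x /usr/libexec/java_home ] && java_home_dir="$(/usr/libexec/java_home 2>/dev/null)"; then
  export JAVA_HOME="$java_home_dir"
fi
```

Open a new Terminal window afterwards (or source the file) and `echo "$JAVA_HOME"` should print the JDK's home directory.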

You should be done now, so try opening the application. If it does not work, the application may require legacy Java 6. You can install Java 6 without actually using it; however, it is also possible to trick applications into thinking legacy Java 6 is installed without installing it.

If you’re running El Capitan, this is actually a little difficult now, because Apple added SIP (System Integrity Protection) to OS X. I have written a post explaining how to disable and enable SIP.

If you’ve disabled SIP, or are not running El Capitan yet, you can trick some applications into thinking legacy Java 6 is installed by creating two folders with the following commands in Terminal:
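The two commands are not reproduced in the text above. The sketch below uses the two folder paths usually quoted for this trick; treat both paths as an assumption and check them before use. It is written as a dry run that only prints the commands.

```shell
# Assumption: these are the two folder paths usually quoted for this trick.
# They are not given in the text above, so verify them before use.
for dir in \
  /System/Library/Java/JavaVirtualMachines/1.6.0.jdk \
  /System/Library/Java/Support/Deploy.bundle
do
  # Dry run: print the command. Remove "echo" to actually create the folder
  # (needs sudo, and SIP must be disabled on El Capitan).
  echo sudo mkdir -p "$dir"
done
```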

Don’t forget to enable SIP again after creating these directories.

This tutorial contains step by step instructions for installing hadoop 2.x on Mac OS X El Capitan. These instructions should work on other Mac OS X versions such as Yosemite and Sierra. This tutorial uses pseudo-distributed mode for running hadoop which allows us to use a single machine to run different components of the system in different Java processes. We will also configure YARN as the resource manager for running jobs on hadoop.

Hadoop Component Versions

- Java 7 or higher. Java 8 is recommended.

- Hadoop 2.7.3 or higher.

Hadoop Installation on Mac OS X Sierra & El Capitan


Step 1: Install Java

Hadoop 2.7.3 requires Java 7 or higher. Run the following command in a terminal to verify the Java version installed on the system.
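The plain command is `java -version`. The sketch below adds a guard, which is my addition and not part of the original steps, so that it prints a clear message when no `java` is on the `PATH`.

```shell
# "java -version" writes to stderr, so redirect it to capture the text.
if command -v java >/dev/null 2>&1; then
  java_version_output="$(java -version 2>&1)" || true
else
  java_version_output="java not found on PATH"
fi
echo "$java_version_output"
```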

Java(TM) SE Runtime Environment (build 1.8.0_121-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.121-b13, mixed mode)

If Java is not installed, you can get it from here.

Step 2: Configure SSH

When Hadoop is installed in distributed mode, it uses passwordless SSH for master-to-slave communication. To enable the SSH daemon on a Mac, go to System Preferences => Sharing and click Remote Login. Then execute the following commands in the terminal to enable passwordless SSH login:
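The usual way to do this is to generate a key pair without a passphrase and add the public key to `authorized_keys`. Here is a minimal sketch, assuming OpenSSH's `ssh-keygen`; the function name `setup_passwordless_ssh` and its optional directory argument are hypothetical and only there to make the steps easy to try in a scratch folder.

```shell
# Sketch: create a passphrase-less RSA key (if none exists) and authorize it
# for logins to this machine. The function name is hypothetical.
# $1: SSH directory (defaults to ~/.ssh)
setup_passwordless_ssh() {
  ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -q -t rsa -P '' -f "$ssh_dir/id_rsa" || return 1
  fi
  # Run this once; each call appends the public key again.
  cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  chmod 600 "$ssh_dir/authorized_keys"
}
```

Call `setup_passwordless_ssh` with no arguments, then check that `ssh localhost` logs in without asking for a password.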

Step 3: Install Hadoop

Download hadoop 2.7.3 binary zip file from this link (200MB). Extract the contents of the zip to a folder of your choice.

Step 4: Configure Hadoop

First we need to configure the location of our Java installation in etc/hadoop/hadoop-env.sh. To find the location of Java installation, run the following command on the terminal,

Copy the output of the command and use it to configure JAVA_HOME variable in etc/hadoop/hadoop-env.sh.
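On macOS the Java location can be printed with `/usr/libexec/java_home`, which I assume is the command meant here. The copy-and-paste step can also be scripted; the helper below is a hypothetical sketch that appends an explicit `JAVA_HOME` line to `hadoop-env.sh`, relying on the fact that a later assignment in the file overrides an earlier one.

```shell
# Hypothetical helper: append a JAVA_HOME export to hadoop-env.sh.
# $1: path to hadoop-env.sh   $2: Java home directory
set_hadoop_java_home() {
  printf 'export JAVA_HOME="%s"\n' "$2" >> "$1"
}

# From the hadoop home folder on macOS, assuming /usr/libexec/java_home exists:
#   set_hadoop_java_home etc/hadoop/hadoop-env.sh "$(/usr/libexec/java_home)"
```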

Modify various hadoop configuration files to properly setup hadoop and yarn. These files are located in etc/hadoop.

etc/hadoop/core-site.xml

etc/hadoop/hdfs-site.xml

etc/hadoop/mapred-site.xml

etc/hadoop/yarn-site.xml
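The contents of the four files are not reproduced above. The sketch below writes a minimal pseudo-distributed setup, using the property names from the Apache single-node setup guide plus the 98.5 disk threshold discussed in the note that follows. The function name `write_pseudo_distributed_conf` is hypothetical, the values are assumptions that may differ from the original files, and the function overwrites any existing copies.

```shell
# Sketch: write minimal pseudo-distributed configs. Overwrites existing files.
# $1: config folder (defaults to etc/hadoop under the hadoop home folder)
write_pseudo_distributed_conf() {
  conf_dir="${1:-etc/hadoop}"

  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF

  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF

  cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF

  cat > "$conf_dir/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage</name>
    <value>98.5</value>
  </property>
</configuration>
EOF
}
```

From the hadoop home folder, `write_pseudo_distributed_conf etc/hadoop` creates all four files in one go.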

Note the disk utilization threshold above. It tells YARN to continue operating as long as disk utilization is below 98.5%. This was required on my system because my disk utilization was 95% and the default threshold is 90%. If disk utilization goes above the configured threshold, YARN reports the node as unhealthy with the error 'local-dirs are bad'.

Step 5: Initialize Hadoop Cluster

From a terminal window switch to the hadoop home folder (the folder which contains various sub folders such as bin and etc). Run the following command to initialize the metadata for the hadoop cluster. This formats the hdfs file system and configures it on the local system. By default, files are created in /tmp/hadoop-<username> folder.
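In Hadoop 2.x the name node is formatted with `bin/hdfs namenode -format`. The sketch below wraps it in a guard, which is my addition, so it only runs from the right folder; the `format_status` variable is there purely for the final message.

```shell
# Run from the hadoop home folder (the one containing bin and etc).
# Formatting wipes existing name node metadata, so use it on a fresh install only.
if [ -x bin/hdfs ]; then
  bin/hdfs namenode -format && format_status="name node formatted"
else
  format_status="bin/hdfs not found: switch to the hadoop home folder first"
fi
echo "${format_status:-format failed, see the messages above}"
```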

It is possible to modify the default location of name node configuration by adding the following property in the hdfs-site.xml file. Similarly the hdfs data block storage location can be changed using dfs.data.dir property.
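The property itself is not shown above. By analogy with `dfs.data.dir`, I assume it is `dfs.name.dir`; Hadoop 2.x documents these settings as `dfs.namenode.name.dir` and `dfs.datanode.data.dir` and still accepts the older names as deprecated aliases. The helper below is a hypothetical sketch that prints such a property for pasting inside `<configuration>` in `hdfs-site.xml`, and the path is a placeholder.

```shell
# Hypothetical helper: print a <property> element for hdfs-site.xml.
# $1: property name   $2: directory path
print_dir_property() {
  cat <<EOF
  <property>
    <name>$1</name>
    <value>$2</value>
  </property>
EOF
}

# Placeholder path; replace it with a folder of your choice.
print_dir_property dfs.name.dir /path/to/hdfs/name
```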

The following commands should be executed from the hadoop home folder.

Step 6: Start Hadoop Cluster

Run the following command from terminal (after switching to hadoop home folder) to start the hadoop cluster. This starts name node and data node on the local system.
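Hadoop 2.x ships the start script as `sbin/start-dfs.sh`. A minimal sketch, with a guard added for illustration:

```shell
# Run from the hadoop home folder. Starts the name node, the data node
# and the secondary name node on the local system.
dfs_started=no
if [ -x sbin/start-dfs.sh ]; then
  sbin/start-dfs.sh && dfs_started=yes
else
  echo "sbin/start-dfs.sh not found; switch to the hadoop home folder first" >&2
fi
```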

To verify that the namenode and datanode daemons are running, execute the jps command in the terminal. It displays the Java processes running on the system.

29219 Jps

19126 NameNode

19303 SecondaryNameNode

Step 7: Configure HDFS Home Directories

We will now configure the hdfs home directories. The home directory is of the form - /user/<username>. My user id on the mac system is jj. Replace it with your user name. Run the following commands on the terminal,
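A minimal sketch of this step, assuming the `-p` option of `hdfs dfs -mkdir` to create `/user` and the user folder in one go. The `hdfs_user` variable is my addition: it takes the login name from `$USER` and falls back to the example name jj.

```shell
# Run from the hadoop home folder, with the name node and data node running.
hdfs_user="${USER:-jj}"
hdfs_home="/user/$hdfs_user"
if [ -x bin/hdfs ]; then
  # -p creates the parent folder /user as well
  bin/hdfs dfs -mkdir -p "$hdfs_home"
else
  echo "bin/hdfs not found; switch to the hadoop home folder first" >&2
fi
```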

Step 8: Run YARN Manager

Start YARN resource manager and node manager instances by running the following command on the terminal,
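The matching script for YARN is `sbin/start-yarn.sh`. A minimal sketch, again with a guard added for illustration:

```shell
# Run from the hadoop home folder. Starts the resource manager and node manager.
yarn_started=no
if [ -x sbin/start-yarn.sh ]; then
  sbin/start-yarn.sh && yarn_started=yes
else
  echo "sbin/start-yarn.sh not found; switch to the hadoop home folder first" >&2
fi
```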

Run jps command again to verify all the running processes,


29283 Jps

19413 ResourceManager

19126 NameNode

19303 SecondaryNameNode

19497 NodeManager

Step 9: Verify Hadoop Installation

Access the URL http://localhost:50070/dfshealth.html to view hadoop name node configuration. You can also navigate the hdfs file system using the menu Utilities => Browse the file system.

Access the URL http://localhost:8088/cluster to view the hadoop cluster details through YARN resource manager.

Step 10: Run Sample MapReduce Job

Hadoop installation contains a number of sample mapreduce jobs. We will run one of them to verify that our hadoop installation is working fine.

We will first copy a file from local system to the hdfs home folder. We will use core-site.xml in etc/hadoop as our input,
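A minimal sketch of the upload, using `hdfs dfs -put` (the original may have used `-copyFromLocal`, which behaves the same for local files):

```shell
# Run from the hadoop home folder. A relative HDFS path such as "." resolves
# to the user's HDFS home directory (/user/<username>).
input_file="etc/hadoop/core-site.xml"
if [ -x bin/hdfs ] && [ -f "$input_file" ]; then
  bin/hdfs dfs -put "$input_file" .
else
  echo "bin/hdfs or $input_file not found; switch to the hadoop home folder first" >&2
fi
```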

Verify that the file is in HDFS folder by navigating to the folder from the name node browser console.

Let us run a mapreduce program on this hdfs file to find the number of occurrences of the word 'configuration' in the file. A mapreduce program for word count is available in the hadoop samples.
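The exact command is not shown above. Since the result is the number of occurrences of one word, I assume the `grep` example from the bundled examples jar, which counts matches of a pattern, rather than the `wordcount` example, which counts every word. The `output` folder must not already exist in HDFS.

```shell
# Run from the hadoop home folder. The glob avoids hard-coding the release number.
examples_jar=""
for jar in share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar; do
  if [ -f "$jar" ]; then examples_jar="$jar"; break; fi
done

if [ -x bin/hadoop ] && [ -n "$examples_jar" ]; then
  # Arguments: <input in HDFS> <output folder in HDFS> <regular expression>
  bin/hadoop jar "$examples_jar" grep core-site.xml output 'configuration'
else
  echo "bin/hadoop or the examples jar was not found; switch to the hadoop home folder first" >&2
fi
```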

This runs the mapreduce job on the hdfs file uploaded earlier and writes the results to the output folder inside the hdfs home folder. The result file is named part-r-00000. You can download it from the name node browser console, or run the following command to copy it to a local folder.

Print the contents of the file. This contains the number of occurrences of the word 'configuration' in core-site.xml.
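Both steps, copying the result and printing it, can be sketched as follows. The guard is my addition.

```shell
# Run from the hadoop home folder after the job has finished.
result_file="part-r-00000"
if [ -x bin/hdfs ]; then
  # Copy the result out of HDFS into the current folder, then print it.
  bin/hdfs dfs -get "output/$result_file" . && cat "$result_file"
else
  echo "bin/hdfs not found; switch to the hadoop home folder first" >&2
fi
```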

Finally delete the uploaded file and the output folder from hdfs system,
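A minimal sketch of the cleanup, assuming the file and folder names used in the previous steps:

```shell
# Run from the hadoop home folder. Removes the uploaded input file and the
# job's output folder from the HDFS home directory.
for target in core-site.xml output; do
  if [ -x bin/hdfs ]; then
    bin/hdfs dfs -rm -r "$target"
  else
    echo "bin/hdfs not found; skipping $target" >&2
  fi
done
```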

Step 11: Stop Hadoop/YARN Cluster

Run the following commands to stop hadoop/YARN daemons. This stops name node, data node, node manager and resource manager.
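The stop scripts mirror the start scripts. A minimal sketch that stops YARN first and HDFS second, with a guard added for illustration:

```shell
# Run from the hadoop home folder.
for script in sbin/stop-yarn.sh sbin/stop-dfs.sh; do
  if [ -x "$script" ]; then
    "$script"
  else
    echo "$script not found; switch to the hadoop home folder first" >&2
  fi
done
```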